FWI with user provided misfit function

using SegyIO, HDF5, PyPlot, JUDI, Random, LinearAlgebra, Printf, SlimPlotting, SlimOptim, Statistics

In this notebook, we show how the loss (data misfit) can be replaced by a user-chosen function for seismic inversion. We use one of JUDI's FWI examples as a skeleton, illustrate the losses available in the package, and show how to provide a custom data misfit function.

Single source loss

In JUDI, the data misfit function is defined for a single shot record (single-source experiment); with multiple sources, the per-shot results are automatically reduced (summed) over sources through Julia's distributed computing. The misfit function is therefore extremely simple and should have the form:

function misfit(dsyn, dobs)

fval = ...

adjoint_source = ...

return fval, adjoint_source

end

where fval is the misfit value for a given pair of synthetic (dsyn) and observed (dobs) shot records, and adjoint_source is the derivative of the misfit with respect to dsyn, i.e. the residual that is backpropagated (the adjoint wave-equation source) to compute the adjoint-state gradient. For example, the standard \(\ell_2\) loss (the default misfit in JUDI) is defined as

function mse(dsyn, dobs)

fval = .5f0 * norm(dsyn - dobs)^2

adjoint_source = dsyn - dobs

return fval, adjoint_source

end

With this convention defined, we will now perform FWI with a few misfit functions and highlight the flexibility of this interface, including the possibility of using Julia's automatic differentiation frameworks to compute the adjoint source of misfit functions that are difficult to differentiate by hand.
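As a quick sanity check of this convention, the sketch below applies the same mse formulas to plain Julia arrays (an assumption: in JUDI the arguments are shot-record data, but the arithmetic is the same) and verifies with a central finite difference that adjoint_source is the derivative of fval with respect to dsyn. The helper check_adjoint_source is hypothetical and not part of JUDI; the same check can be run on any custom misfit before using it in an inversion.

```julia
using LinearAlgebra, Random

# Same formulas as the mse misfit above, applied to plain arrays.
function mse_sketch(dsyn, dobs)
    fval = 0.5 * norm(dsyn - dobs)^2
    adjoint_source = dsyn - dobs
    return fval, adjoint_source
end

# Hypothetical helper: compare the directional derivative of fval along a
# random perturbation δ with the inner product <adjoint_source, δ>.
# Returns the relative mismatch, which should be close to zero.
function check_adjoint_source(misfit, dsyn, dobs; h=1e-4)
    δ = randn(size(dsyn)...)
    _, adj = misfit(dsyn, dobs)
    fp, _ = misfit(dsyn + h * δ, dobs)
    fm, _ = misfit(dsyn - h * δ, dobs)
    fd = (fp - fm) / (2h)      # central finite difference of fval
    an = dot(adj, δ)           # prediction from the adjoint source
    return abs(fd - an) / max(abs(an), eps())
end

Random.seed!(1)
dobs = randn(100, 20)   # stand-in "shot record": 100 time samples x 20 receivers
dsyn = randn(100, 20)
println(check_adjoint_source(mse_sketch, dsyn, dobs))
```

Because the check only needs the (fval, adjoint_source) pair, it works unchanged for any function written in the form described above.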

Inversion setup

# Define some utilities for plotting

sx(d::judiVector) = d.geometry.xloc[1][1]

We load the FWI starting model from the HDF5 model file and set up the JUDI model structure:

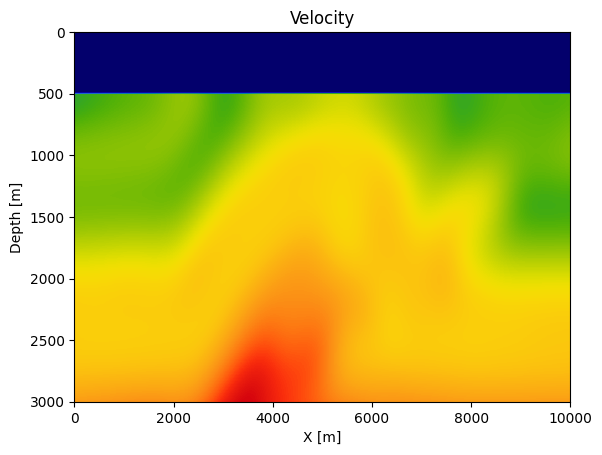

m0, n, d, o = read(h5open("overthrust_model.h5","r"),"m0","n","d","o")

model0 = Model((n[1],n[2]), (d[1],d[2]), (o[1],o[2]), copy(m0))

Model (n=(401, 121), d=(25.0f0, 25.0f0), o=(0.0f0, 0.0f0)) with parameters (:m, :rho)

# Plot the velocity: the model stores squared slowness, so v = m0^(-1/2)

plot_velocity(m0'.^(-.5), d)

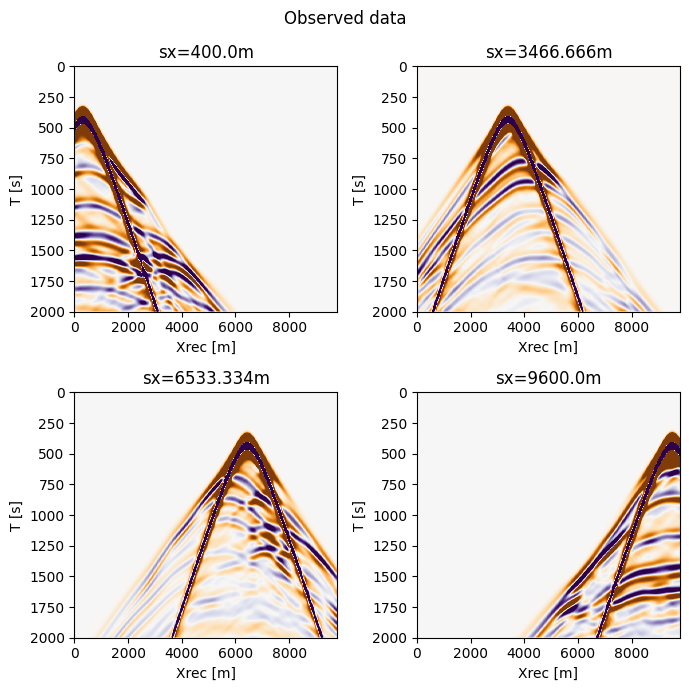

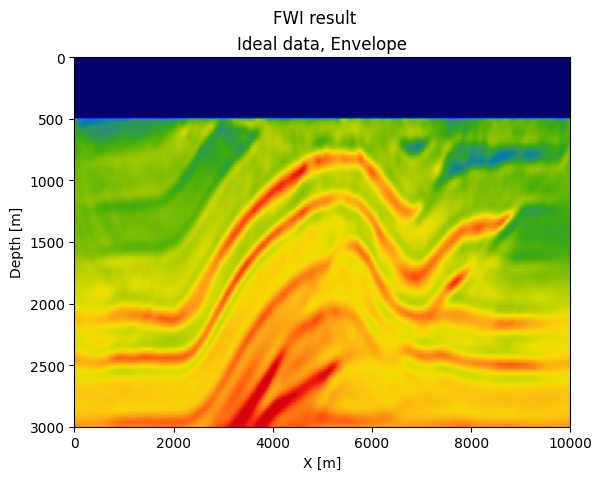
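The model stores squared slowness rather than velocity, which is why the plot call converts with m0'.^(-.5). A small sketch of that conversion, assuming velocities in km/s and squared slowness in s²/km² (the units used in JUDI's examples) and using hypothetical velocity values, also shows that bounds on m map to reversed bounds on velocity, which matters for the mmin/mmax constraints defined later:

```julia
# Hypothetical velocity values in km/s (e.g. water and faster rock).
v = [1.5 2.0; 3.0 6.0]

m = v .^ (-2)            # squared slowness, the quantity stored in model0.m
v_back = m .^ (-0.5)     # same conversion as m0'.^(-.5) in the plot call

println(m[1, 1])         # 1 / 1.5^2, about 0.444

# The smallest m is the fastest velocity, so a lower bound on m is an upper
# bound on velocity. With the 0.95 / 1.1 factors used for mmin / mmax:
vmax_allowed = (0.95 * minimum(m))^(-0.5)   # about 1.026 x maximum(v)
vmin_allowed = (1.1 * maximum(m))^(-0.5)    # about 0.953 x minimum(v)
```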

Then we read the SEG-Y file containing our test data set. The data was generated with a 2D excerpt from the Overthrust velocity model and consists of 31 shot records with 2 seconds recording time. We load the data and set up a JUDI seismic data vector:

block = segy_read("overthrust_shot_records.segy");

d_obs = judiVector(block);

Since the SEG-Y file contains the source coordinates but not the wavelet itself, we create a JUDI Geometry structure for the source and then manually set up an 8 Hz Ricker wavelet. As for the observed data, we set up a JUDI seismic data vector q with the source geometry and wavelet:

src_geometry = Geometry(block; key="source");

src_data = ricker_wavelet(src_geometry.t[1], src_geometry.dt[1], 0.008f0);

q = judiVector(src_geometry, src_data);

figure(figsize=(7, 7))

suptitle("Observed data")

subplot(221)

plot_sdata(d_obs[1]; new_fig=false, cmap="PuOr", name="sx=$(sx(q[1]))m")

subplot(222)

plot_sdata(d_obs[6]; new_fig=false, cmap="PuOr", name="sx=$(sx(q[6]))m")

subplot(223)

plot_sdata(d_obs[11]; new_fig=false, cmap="PuOr", name="sx=$(sx(q[11]))m")

subplot(224)

plot_sdata(d_obs[16]; new_fig=false, cmap="PuOr", name="sx=$(sx(q[16]))m")

tight_layout()
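The source wavelet above is created with ricker_wavelet(..., 0.008f0): JUDI expresses time in milliseconds and frequency in kHz, so 0.008 corresponds to 8 Hz. The sketch below uses the textbook Ricker formula \(w(t) = (1 - 2\pi^2 f_0^2 t^2)\, e^{-\pi^2 f_0^2 t^2}\) with a hypothetical delay of one period. It is not JUDI's implementation, and the 5 ms sampling interval is an assumed value, not the one stored in the dataset.

```julia
# Textbook Ricker wavelet (hypothetical stand-in for ricker_wavelet).
# Units assumed: tmax and dt in ms, f0 in kHz.
function ricker_sketch(tmax, dt, f0)
    t0 = 1 / f0                       # delay of one period, in ms
    t = collect(0:dt:tmax) .- t0      # time axis shifted so the peak is at t0
    a = (π * f0 .* t) .^ 2
    return (1 .- 2 .* a) .* exp.(-a)
end

# 2 s record, assumed 5 ms sampling, 8 Hz peak frequency (0.008 kHz).
w = ricker_sketch(2000f0, 5f0, 0.008f0)
```

With these values the peak falls at 125 ms, one period of an 8 Hz wave, which is sample 26 of the 401 samples.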

Misfit functions

With the data and model defined, we can now define the misfit functions we will be working with. For some of them, we will modify the observed data to highlight specific properties of the misfit.

Predefined misfit

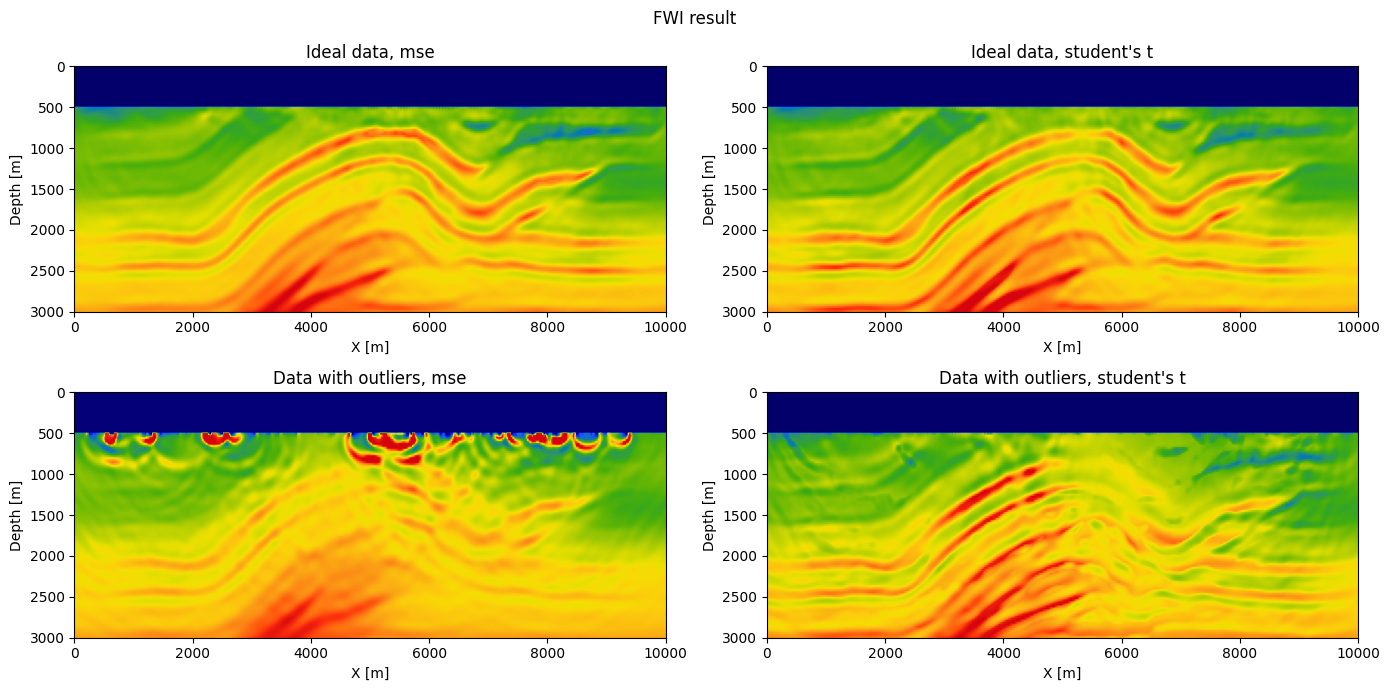

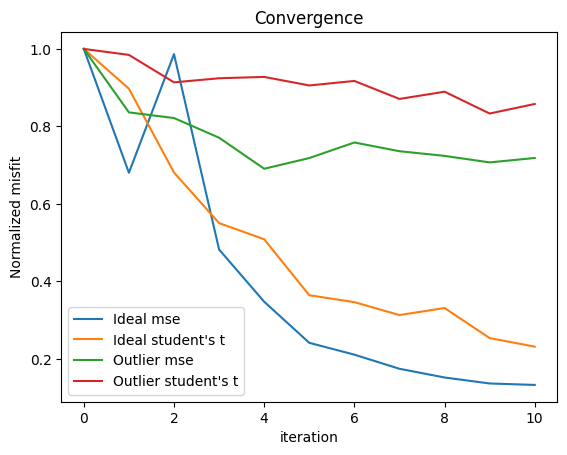

JUDI provides two predefined misfits: mse, the standard \(\ell_2\) loss, and studentst, which corresponds to the Student's t loss and has been shown to increase robustness against outliers in the data. The Student's t loss is defined as

function studentst(dsyn, dobs; k=2)

fval = sum(.5 * (k + 1) * log.(1 .+ (dsyn - dobs).^2 ./ k)

adjoint_source = (k + 1) .* (dsyn - dobs) ./ (k .+ (dsyn - dobs).^2)

return fval, adjoint_source

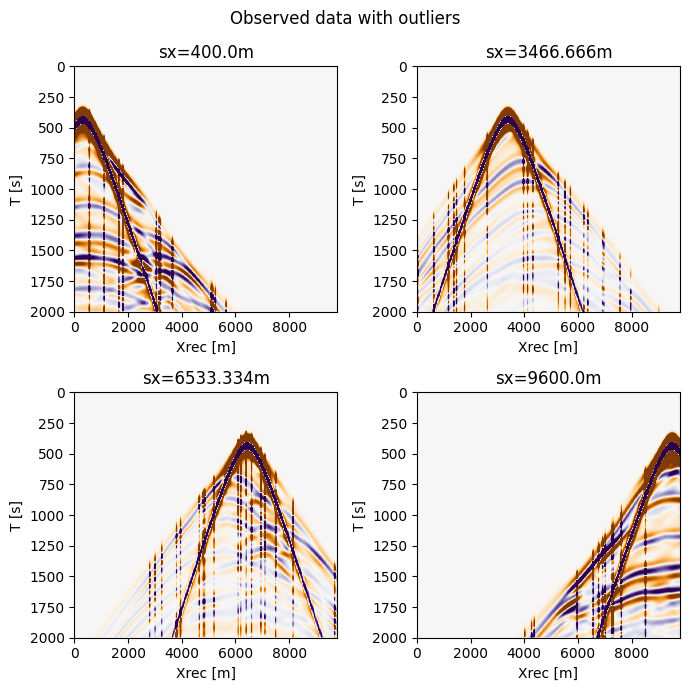

endwhere k is the number of degree of freedom (usually 1 or 2). We can see that in this case the adjoint source is pointwise normalized (in time and receiver position) which allows to mitigate outlier in the data such as incorrect amplitudes due to geophone mis-functionment. To illustrate this property, wee create an aritifical dataset with outliers to showcase the added robustness by artificially rescaling a few traces picked at random in every shot record of the dataset

d_outlier = deepcopy(d_obs)

## Add outliers to the data

for s=1:d_outlier.nsrc

# Scale 20 randomly picked traces (drawn with replacement) by a factor of 10

nrec = d_outlier.geometry.nrec[s]

inds = rand(1:nrec, 20)

d_outlier.data[s][:, inds] .*= 10

end

figure(figsize=(7, 7))

suptitle("Observed data with outliers")

subplot(221)

plot_sdata(d_outlier[1]; new_fig=false, cmap="PuOr", name="sx=$(sx(q[1]))m")

subplot(222)

plot_sdata(d_outlier[6]; new_fig=false, cmap="PuOr", name="sx=$(sx(q[6]))m")

subplot(223)

plot_sdata(d_outlier[11]; new_fig=false, cmap="PuOr", name="sx=$(sx(q[11]))m")

subplot(224)

plot_sdata(d_outlier[16]; new_fig=false, cmap="PuOr", name="sx=$(sx(q[16]))m")

tight_layout()
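To see why the Student's t loss is less sensitive to these rescaled traces, the sketch below evaluates both adjoint sources, using the formulas from the mse and studentst definitions above, for a few hypothetical residual values r = dsyn - dobs, and checks the Student's t adjoint source against a finite-difference derivative of its misfit. The \(\ell_2\) adjoint source grows linearly with r, whereas the Student's t one never exceeds \((k+1)/(2\sqrt{k})\), reached at \(r = \sqrt{k}\).

```julia
k = 2
r = [0.1, 1.0, 10.0, 100.0]              # hypothetical residuals dsyn - dobs

adj_mse = r                              # l2 adjoint source: dsyn - dobs
adj_st = (k + 1) .* r ./ (k .+ r .^ 2)   # Student's t adjoint source

# Maximum of the Student's t adjoint source, reached at r = sqrt(k).
bound = (k + 1) / (2 * sqrt(k))

# Per-sample Student's t misfit and its central finite-difference derivative.
fst(ri) = 0.5 * (k + 1) * log(1 + ri^2 / k)
h = 1e-6
fd = [(fst(ri + h) - fst(ri - h)) / (2h) for ri in r]

for (ri, a, b) in zip(r, adj_mse, adj_st)
    println("r = $ri   mse: $a   studentst: $(round(b, digits=4))")
end
```

A trace multiplied by 10 therefore dominates the \(\ell_2\) gradient, while its contribution to the Student's t gradient is damped.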

Setup inversion

With the data and misfit functions defined, we can now run the inversion. For this example, we use SlimOptim's spectral projected gradient (SPG), a simple gradient-based optimization method in which we impose bound constraints on the model. For practical reasons, we also work with a stochastic objective function that computes the gradient on a random subset of shots at each iteration. This approach has been shown to be efficient for seismic inversion, both with advanced constrained algorithms and on simple illustrative examples such as this one. We use 8 shots per iteration (about a quarter of the 31 shots in the dataset) and run 10 iterations of the algorithm.
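Two ingredients of this setup can be sketched with small stand-in arrays (hypothetical values, not the Overthrust model). The bound projection proj used below takes the entrywise median of (lower bound, value, upper bound), which is the same as clamping each entry to its bounds, and the stochastic objective draws a fresh random subset of shot indices with randperm at every evaluation:

```julia
using Random, Statistics

# Hypothetical bounds and model, standing in for mmin, mmax and x.
mmin = fill(0.2, 3, 2)
mmax = fill(0.5, 3, 2)
x = [0.1 0.3; 0.6 0.25; 0.5 -1.0]

# Median of (lower, value, upper) per entry, as in proj(x) ...
proj_median(x) = reshape(median([vec(mmin) vec(x) vec(mmax)]; dims=2), size(x))
# ... is equivalent to clamping each entry to [lower, upper].
proj_clamp(x) = clamp.(x, mmin, mmax)

@assert proj_median(x) == proj_clamp(x)

# Mini-batch of distinct shot indices, as drawn in objective_function.
nsrc, batchsize = 31, 8
i = randperm(nsrc)[1:batchsize]
```

The clamp form avoids building the intermediate n×3 matrix; the median form in the notebook gives the same result.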

g_const = 0

function objective_function(x, d_obs, misfit=mse)

model0.m .= reshape(x, size(model0));

# fwi function value and gradient

i = randperm(d_obs.nsrc)[1:batchsize]

fval, grad = fwi_objective(model0, q[i], d_obs[i]; misfit=misfit)

# Normalize for nicer convergence

g = grad.data

# Mute water

g[:, 1:20] .= 0f0

g_const == 0 && (global g_const = 1/norm(g, Inf))

return fval, g_const .* g

end

# Bound constraints based on initial velocity

mmin = .95f0 * minimum(m0) * ones(Float32, n...)

mmax = 1.1f0 * maximum(m0) * ones(Float32, n...)

# Fix water layer

mmin[:, 1:20] .= m0[1,1]

mmax[:, 1:20] .= m0[1,1]

# Bound projection

proj(x) = reshape(median([vec(mmin) vec(x) vec(mmax)]; dims=2), size(model0))

batchsize = 8

niter = 10

# Setup SPG

options = spg_options(verbose=3, maxIter=niter, memory=3)

# Compare l2 with students t on ideal data

ϕmse_ideal = x->objective_function(x, d_obs)

ϕst_ideal = x->objective_function(x, d_obs, studentst)

# Compare l2 with students t on the data with outliers

ϕmse_out = x->objective_function(x, d_outlier)

ϕst_out = x->objective_function(x, d_outlier, studentst)

# Perform the inversion

g_const = 0

solmse_ideal = spg(ϕmse_ideal, copy(m0), proj, options)

g_const = 0

solst_ideal = spg(ϕst_ideal, copy(m0), proj, options)

g_const = 0

solmse_out = spg(ϕmse_out, copy(m0), proj, options)

g_const = 0

solst_out = spg(ϕst_out, copy(m0), proj, options)

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.19 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.23 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.23 s

Operator `gradient` ran in 0.11 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.19 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.21 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.19 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.19 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.19 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.22 s

Operator `gradient` ran in 0.11 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.19 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.34 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.21 s

Operator `gradient` ran in 0.11 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.19 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.19 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.20 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.20 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.21 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.19 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.20 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.19 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.17 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.20 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.19 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.13 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.19 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.31 s

Operator `gradient` ran in 0.10 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.20 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.20 s

Operator `gradient` ran in 0.11 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.13 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.24 s

Operator `gradient` ran in 0.10 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.21 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.28 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.31 s

Operator `gradient` ran in 0.10 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.19 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.12 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.20 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.22 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.30 s

Operator `gradient` ran in 0.23 s

Operator `forward` ran in 0.19 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.28 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.38 s

Operator `gradient` ran in 0.18 s

Operator `forward` ran in 0.29 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.22 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.22 s

Operator `gradient` ran in 0.11 s

Operator `forward` ran in 0.21 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.19 s

Operator `forward` ran in 0.19 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.21 s

Operator `gradient` ran in 0.11 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.19 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.23 s

Operator `gradient` ran in 0.11 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.20 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.13 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.21 s

Operator `gradient` ran in 0.11 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.25 s

Operator `gradient` ran in 0.11 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.24 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.22 s

Operator `gradient` ran in 0.11 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.17 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.17 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.13 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.13 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.13 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.17 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.19 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.21 s

Operator `forward` ran in 0.19 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.21 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.18 s

Operator `forward` ran in 0.18 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.24 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.19 s

Operator `gradient` ran in 0.12 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.13 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.13 s

Operator `forward` ran in 0.13 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.23 s

Operator `gradient` ran in 0.17 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.17 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.15 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.14 s

Operator `forward` ran in 0.16 s

Operator `gradient` ran in 0.16 s

Operator `forward` ran in 0.14 s

Operator `gradient` ran in 0.15 s

Operator `forward` ran in 0.14 s

Running SPG...

Number of objective function to store: 3
Using spectral projection : true
Maximum number of iterations: 10
SPG optimality tolerance: 1.00e-10
SPG progress tolerance: 1.00e-10
Line search: BackTracking{Float32, Int64}

Iteration  FunEvals  GradEvals  Projections  Step Length  alpha        Function Val  Opt Cond
0          0         0          0            0.00000e+00  0.00000e+00  2.85864e+05   4.26022e-01
1          2         2          6            1.00000e+00  4.44444e-02  1.94472e+05   4.20656e-01
2          4         4          9            1.00000e+00  3.01965e-02  2.82033e+05   4.51937e-01
3          6         6          12           1.00000e+00  1.81715e-02  1.37834e+05   4.38780e-01
4          8         8          15           1.00000e+00  2.18833e-02  9.92995e+04   4.25244e-01
5          10        10         18           1.00000e+00  1.64228e-02  6.89717e+04   4.15275e-01
6          12        12         21           1.00000e+00  1.05933e-02  6.02415e+04   1.99492e-01
7          14        14         24           1.00000e+00  8.04366e-03  4.98149e+04   4.05012e-01
8          16        16         27           1.00000e+00  7.96887e-03  4.33893e+04   2.28679e-01
9          18        18         30           1.00000e+00  1.03554e-02  3.89754e+04   4.11567e-01
10         20        20         33           1.00000e+00  1.01486e-02  3.79055e+04   2.55810e-01

Running SPG...

Number of objective function to store: 3
Using spectral projection : true
Maximum number of iterations: 10
SPG optimality tolerance: 1.00e-10
SPG progress tolerance: 1.00e-10
Line search: BackTracking{Float32, Int64}

Iteration  FunEvals  GradEvals  Projections  Step Length  alpha        Function Val  Opt Cond
0          0         0          0            0.00000e+00  0.00000e+00  2.40584e+05   4.27019e-01
1          2         2          6            1.00000e+00  4.44444e-02  2.15859e+05   4.21937e-01
2          5         5          9            1.00000e-01  2.95728e-02  1.63854e+05   4.15225e-01
3          7         7          12           1.00000e+00  9.97828e-03  1.32403e+05   4.51937e-01
4          9         9          15           1.00000e+00  6.52763e-03  1.22370e+05   4.14880e-01
5          11        11         18           1.00000e+00  4.91783e-03  8.75994e+04   4.48727e-01
6          13        13         21           1.00000e+00  4.80716e-03  8.33152e+04   4.17436e-01
7          15        15         24           1.00000e+00  5.27185e-03  7.52859e+04   4.22962e-01
8          17        17         27           1.00000e+00  7.61779e-03  7.96640e+04   3.93036e-01
9          19        19         30           1.00000e+00  6.31348e-03  6.09902e+04   4.46877e-01
10         21        21         33           1.00000e+00  3.74264e-03  5.56816e+04   3.77964e-01

Running SPG...